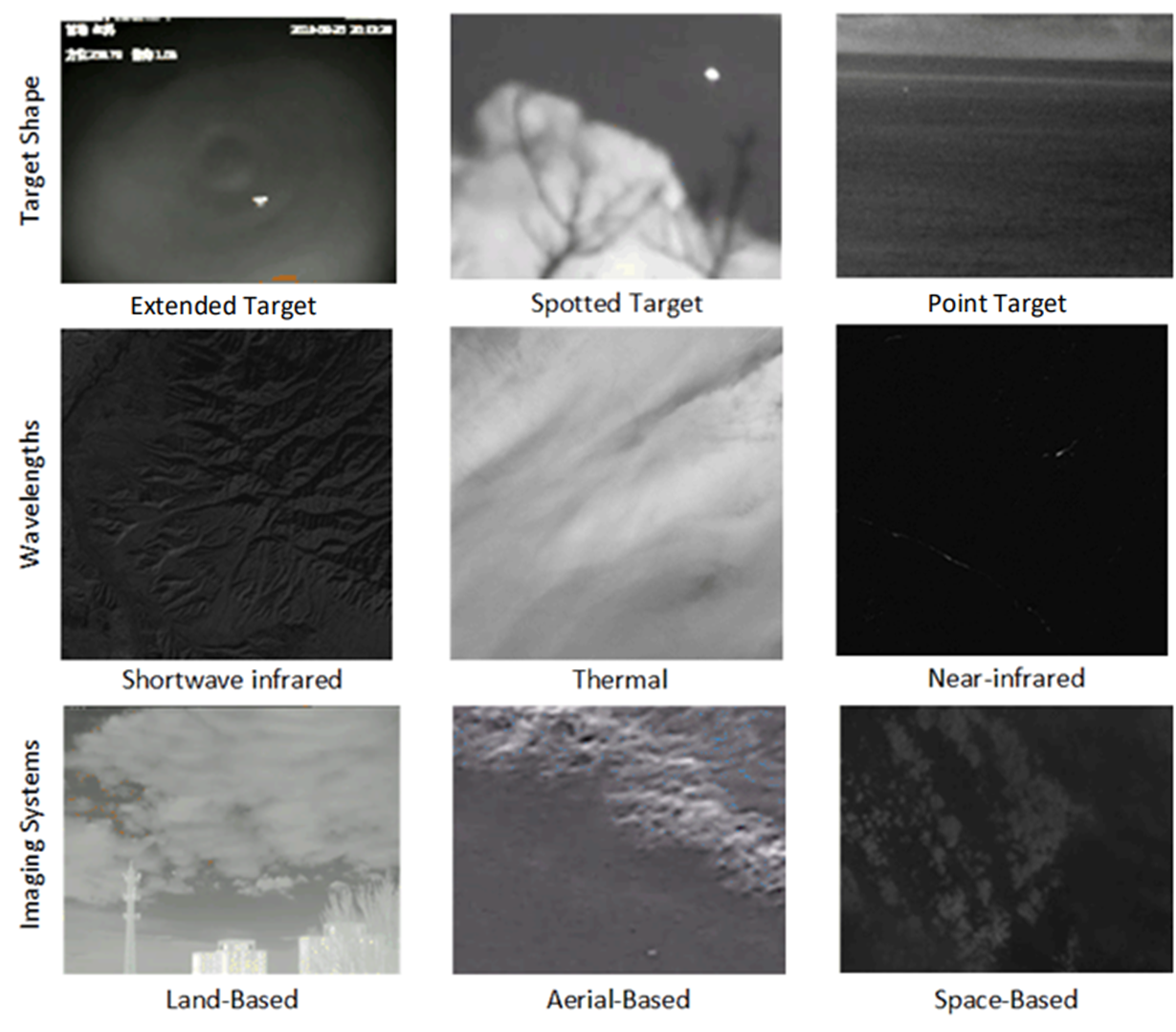

Datasets

Download:

The dataset for Track 1 is available on Baidu Drive and OneDrive.

The dataset for Track 2 is available on Baidu Drive and OneDrive.

Evaluation Metrics

Baseline Model

- Baseline model results for test A (60% of test results):

| Model | IoU | Pd | Fa | Score |
|---|---|---|---|---|
| DNANet_full (full supervision) | 37.478 | 68.637 | 5.019e-6 | 51.558 |
| DNANet_LESPS_coarse (weak supervision) | 27.766 | 64.294 | 2.153e-5 | 46.030 |

- Baseline model results for test B (100% of test results):

| Model | IoU | Pd | Fa | Score |
|---|---|---|---|---|
| DNANet_full (full supervision) | 40.773 | 68.588 | 4.915e-6 | 54.681 |
| DNANet_LESPS_coarse (weak supervision) | 29.266 | 63.636 | 2.294e-5 | 46.451 |

- Baseline model training and testing code: https://github.com/XinyiYing/LESPS

- Baseline model training and test result file: Baidu Drive, OneDrive
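The scoring formula is not stated in this section. As an observation only, the Score values in the test B table above agree, to three decimals, with the unweighted mean of IoU and Pd; the first test A row does not follow this pattern, so the relationship should be checked against the official metric definition. The sketch below reproduces that arithmetic for the test B rows. The helper name `mean_score` is hypothetical and is not taken from the baseline repository.

```python
def mean_score(iou: float, pd: float) -> float:
    """Hypothetical helper: unweighted mean of IoU and Pd (both in percent).

    Assumption: this is only an observed fit to the test B table,
    not the official challenge formula.
    """
    return (iou + pd) / 2.0


# Test B rows copied from the table above: (IoU, Pd, reported Score).
test_b = {
    "DNANet_full": (40.773, 68.588, 54.681),
    "DNANet_LESPS_coarse": (29.266, 63.636, 46.451),
}

for name, (iou, pd, reported) in test_b.items():
    computed = mean_score(iou, pd)
    # Allow for rounding at the table's three-decimal precision.
    matches = abs(computed - reported) < 1e-3
    print(f"{name}: computed={computed:.4f} reported={reported} match={matches}")
```

The same check can be run on your own results before submission to compare against the baseline rows, keeping in mind that Fa does not enter this assumed formula.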

- Baseline model training and test results:

| Model | IoU | Pd | Fa | Params | FLOPs | Score |
|---|---|---|---|---|---|---|
| UNet | 62 | 59 | 1.5e-05 | 0.9M | 5.08G | 60 |

- Baseline model training and testing code: https://github.com/YeRen123455/ICPR-Track2-LightWeight

- Baseline model training and test result file: Baidu Drive, OneDrive